Explainability: “The ability to make something understandable, especially by clarifying how decisions or outcomes are generated.”

Picture the situation: your GenAI chatbot provides an answer that seems off-base. Without understanding the “why” behind its output, it’s difficult to know whether the information is reliable or whether the solution is truly optimal. This is where explainability in GenAI becomes critically important.

Explainable AI (XAI) allows us to understand the reasoning behind a GenAI system’s decisions. This transparency is critical for businesses because it fosters:

- Trust: When you can see the logic behind the AI’s output, you can be confident in its recommendations. This allows for informed decision-making and a stronger foundation for collaboration between humans and AI.

- Accountability: XAI helps identify potential biases or errors in the model’s training data. This empowers businesses to address these issues and ensure the AI is operating ethically and fairly.

- Improved Workflow Integration: By understanding the AI’s reasoning, businesses can better integrate AI outputs into existing workflows. This optimizes processes and ensures smooth collaboration.

xop.ai and Rezolve.ai: Unveiling the Black Box

In collaboration with Rezolve.ai, we’ve developed a game-changing insight dashboard that demystifies the decision-making process of your GenAI solution. Here’s what we can do:

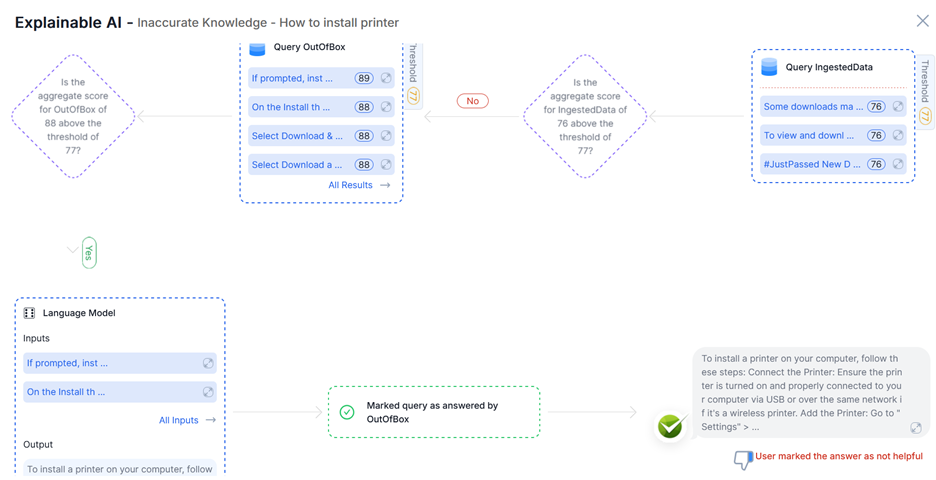

- Provide a Schematic View of the Decision Tree: Visualize the path your GenAI takes to arrive at an answer. This transparent flow chart allows for easy comprehension of the reasoning process.

- Information Source Identification: Gain insight into the specific sources used by the GenAI to develop its answer. This could include user-curated knowledge bases, expert opinions, or external data sources.

- Source Prioritization Rationale: Understand why the GenAI prioritizes certain information sources over others. For example, the dashboard may explain why user-vetted knowledge takes precedence over unverified internet sources.

- Source Aggregation and Combination: See how the GenAI combines information from various sources to formulate a response. This helps understand the overall weight each source carries in the final answer.

- Discarded Information and Justification: Identify data points the AI disregarded and why. This is crucial for pinpointing potential biases or irrelevant information within the system.
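To make these ideas concrete, here is a minimal Python sketch of what an explanation record could look like. It is purely illustrative and not the actual xop.ai or Rezolve.ai implementation: the `Source` and `Explanation` classes, the `TRUST` weights, the `explain` function, and the `min_score` threshold are all hypothetical names and values chosen for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Source:
    name: str
    kind: str         # hypothetical labels: "user_vetted", "expert", "internet"
    relevance: float  # 0..1, how well the source matches the question


@dataclass
class Explanation:
    used: dict[str, float] = field(default_factory=dict)     # source -> share of the answer
    discarded: dict[str, str] = field(default_factory=dict)  # source -> reason it was dropped


# Assumed trust weights: vetted knowledge outranks unverified internet text.
TRUST = {"user_vetted": 1.0, "expert": 0.8, "internet": 0.4}


def explain(sources: list[Source], min_score: float = 0.3) -> Explanation:
    """Score each source, record why weak ones were dropped, normalise the rest."""
    result = Explanation()
    scores: dict[str, float] = {}
    for src in sources:
        trust = TRUST.get(src.kind)
        if trust is None:
            result.discarded[src.name] = f"unknown source kind '{src.kind}'"
            continue
        score = trust * src.relevance
        if score < min_score:
            result.discarded[src.name] = (
                f"score {score:.2f} below threshold {min_score:.2f}"
            )
        else:
            scores[src.name] = score
    total = sum(scores.values())
    for name, score in scores.items():
        result.used[name] = score / total  # relative weight in the final answer
    return result


report = explain([
    Source("HR policy KB", "user_vetted", 0.9),
    Source("Forum thread", "internet", 0.5),
    Source("Consultant memo", "expert", 0.75),
])
print("Used:", report.used)
print("Discarded:", report.discarded)
```

In this toy run, the forum thread falls below the threshold and is listed as discarded with a reason, while the two remaining sources share the weight of the final answer. A real system would derive relevance and trust from the model and its retrieval pipeline rather than from fixed numbers.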

Why is this Helpful? This level of transparency empowers you to:

- Validate the data: Verify if the information used is reliable and the reasoning sound.

- Identify potential blind spots: Spot missing information, hidden inaccuracies, or conflicting information within the data the AI processed.

- Refine the GenAI Solution: Leverage insights from the dashboard to further improve the accuracy and effectiveness of the AI.

Explainable GenAI is no longer a luxury; it’s a necessity for businesses that want to foster trust in their AI chatbots. With xop.ai and Rezolve.ai (https://rezolve.ai), you gain a transparent window into your GenAI’s decision-making process, allowing you to harness its power with confidence.

Want to learn more about our GenAI solutions? Book a time with Matt. http://calendly.com/mattruck